Dan Cobley, Google UK’s Managing Director, recently revealed that Google’s infamous 2007 “50 Shades of Blue” experiment involving ad links in Gmail increased revenue by $200 million a year. These results shifted the balance of power from design-driven decisions to data-driven engineering decisions, and famously led Google’s top designer, Doug Bowman, to ultimately resign in frustration.

Search professionals have since observed and reported hundreds of UI experiments, and the search engine results page (SERP) has gone through dozens of iterations since the days of 10 blue links.

Universal Search, embedded local listings with their 3-pack, 5-pack and 7-pack variations, rich snippets, authorship, the Knowledge Graph, and of course the carousel have all become part of the SEM lexicon. You can be confident that all of these changes were A/B (or multivariate) tested and vetted against some conversion goal.

So what does this have to do with SEO?

How Does Google Measure A Conversion From Organic?

If every change to the presentation layer is driven by conversion optimization, it is reasonable to assume that organic rankings are also informed by the same approach. The dilemma is that we do not know what criteria Google is using to measure a “conversion” out of organic results.

The first concrete example of Google using user data to influence SERPs appeared in 2009, when Matt Cutts revealed that Google’s site links are partially driven by user behavior. This tidbit surfaced during a site clinic review of Meijer.com at SMX West, when he noted that the Store Locator was buried in the site’s primary navigation but was nonetheless a popular page “because it appears in your site link.”

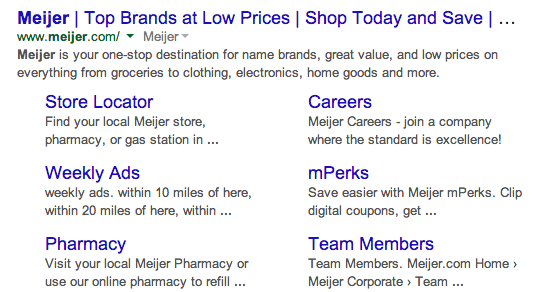

The Meijer Store Locator is the top choice in the site links, despite the fact that it is still buried in the global navigation and the page is essentially devoid of content.

A quick look at the AdWords Keyword Tool shows that “meijer locations” is the 12th most popular query, well below “ad” (#2), “mperks” (#4), “pharmacy” (#6), and “weekly ad” (#8). Even if you were to group these keywords by landing page, it is difficult to imagine behavior in the SERPs that would elevate the Store Locator to the top of the sitelinks. The only thing keeping it prominent in the sitelinks appears to be user behavior after the query.

The Big Brand Bailout

The second confirmation of user behavior affecting rankings came with the Big Brand Bailout, which was first observed in February 2009: big brands started magically dominating search results for highly competitive short-tail queries. Displaced site owners (many with lead gen sites) screamed in protest as sites with fewer backlinks suddenly vaulted over them. Google called this update Vince; @stuntdubl called it the Big Brand Bailout, and that is the name that stuck.

Hundreds of pundits suggested how and why this happened. Eventually, Matthew Trewhella, a Google engineer who was not yet trained in the @MattCutts School of Answering-Questions-Without-Saying-Anything-Meaningful, slipped up and revealed during an SEOGadget Q&A session that:

- Google is testing to find the results that produce the fewest subsequent queries, from which we conclude that subsequent queries are a “conversion failure” when Google tests organic results. Matthew said the Vince update was about Google minimizing the number of times people have to search to find the products or information they are looking for. Every time a user has to perform a second search, Google regards it as its own failure for not bringing up the right result the first time.

- Google is using data on users’ subsequent query behavior to improve SERPs for the initial query, elevating sites for which users indicate intent later in the click stream. In other words, Google is using user behavior to disambiguate intent and influence rankings for the original query in an attempt to improve conversions.
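To make the “conversion failure” idea concrete, here is a minimal Python sketch, assuming a hypothetical click log in which each record notes the query, the clicked URL, and whether the same user searched again afterwards. The `Click` record and both functions are illustrative names, not anything Google has disclosed; the sketch only shows how a requery rate per result could be computed and used to order results for a query.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Click:
    """Hypothetical log record: a query, the result the user clicked,
    and whether the same user went on to issue another search."""
    query: str
    url: str
    searched_again: bool


def requery_rates(log):
    """Return {(query, url): fraction of clicks followed by another search}."""
    clicks = defaultdict(int)
    failures = defaultdict(int)
    for c in log:
        key = (c.query, c.url)
        clicks[key] += 1
        if c.searched_again:
            # Counted as a "conversion failure" for this result.
            failures[key] += 1
    return {key: failures[key] / clicks[key] for key in clicks}


def rank_by_fewest_requeries(log, query):
    """Order the URLs seen for `query` from lowest to highest requery rate."""
    rates = requery_rates(log)
    urls = [url for (q, url) in rates if q == query]
    return sorted(urls, key=lambda url: rates[(query, url)])
```

With two clicks on `a.example` that were both followed by another search, and two clicks on `b.example` of which only one was, `rank_by_fewest_requeries(log, "widgets")` puts `b.example` first.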

Learning From Panda

The third example of user engagement data affecting search results came with Panda. Many parts of the Panda update remain opaque, and the classifier has evolved significantly since its first release. Google characterized it as a machine learning algorithm and hence a black box that wouldn’t allow for manual intervention.

We later learned that some sites were added to the training set as quality sites, causing them to recover and be locked in as “good sites.” This makes it especially hard to compare winners and losers to reverse engineer best practices. What most SEO practitioners agree on is that user behavior and engagement play a large role in a site’s Panda score. If users quickly return to the search engine and click on the next result or refine their query, that can’t be a good signal for site quality.
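The quick-return pattern described above can be sketched the same way. This is a minimal illustration, assuming a hypothetical visit log and an arbitrary 30-second threshold; neither the threshold nor the record format comes from Google, and Panda’s actual inputs remain unknown.

```python
from dataclasses import dataclass
from typing import Optional

# Assumption: the 30-second cutoff is illustrative only, not a known Google value.
QUICK_RETURN_SECONDS = 30.0


@dataclass
class Visit:
    """Hypothetical record of one click from the SERP to a site."""
    site: str
    seconds_on_site: Optional[float]  # None if the user never came back to the SERP
    next_action: Optional[str]        # "click_next_result", "refine_query" or None


def is_quick_return(v: Visit, threshold: float = QUICK_RETURN_SECONDS) -> bool:
    """True if the user bounced back fast and then clicked another result or re-searched."""
    return (
        v.seconds_on_site is not None
        and v.seconds_on_site < threshold
        and v.next_action in ("click_next_result", "refine_query")
    )


def quick_return_share(visits, site):
    """Fraction of a site's visits that ended in a quick return (0.0 if no visits)."""
    site_visits = [v for v in visits if v.site == site]
    if not site_visits:
        return 0.0
    return sum(is_quick_return(v) for v in site_visits) / len(site_visits)
```

A long visit followed by a refined query, or a visit after which the user never returned, does not count as a quick return here; only short visits followed by another click or search do.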

What does Google’s conversion testing mean for SEO?

Putting These Learnings To Use

Google’s announcements are often aspirational and seemingly lack nuance. They tell us that they have solved a problem or devalued a tactic, and SEOs quickly point to the exceptions before dismissing the announcement as hype or FUD. Years later, we look around and that tactic is all but dead and its practitioners are toast (unless the company is big enough to earn Google immunity). These pronouncements feel false and misleading because they are made several iterations before the goal is accomplished. The key to understanding where Google is now is to look at what they told us they were doing a year ago.

What they told us 18 months ago is that the Search Quality Team had been renamed the Knowledge Team; they want to answer people’s search intent instead of always pushing users off to other (our) websites. Google proudly proclaims that it makes over 500 algorithm updates per year and that it is constantly testing refinements, new layouts and features.

They also allude to the progress they are making with machine learning and their advancing ability to make connections based on the enormous amount of data they accumulate every day. Instead of the Knowledge Team, they should have renamed it the Measurement Team, because Google is measuring everything and mining that data to understand intent and provide users with the variation they are looking for.

What does this mean to site owners?

Matt Cutts told us at SMX Advanced in 2013 that only 15% of queries are of interest to any webmaster or SEO anywhere. Eighty-five percent (85%) of what Google worries about, we actually pay no attention to. An update that affects 1.5% of all queries can therefore affect 10% of the queries some SEO somewhere cares about, and 50% of the top “money terms” on Google.
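The 10% figure follows from the two percentages, assuming every query the update touches falls inside the 15% that SEOs care about:

$$\frac{1.5\%\ \text{of all queries}}{15\%\ \text{of all queries}} = \frac{0.015}{0.15} = 0.10 = 10\%$$

The 50% figure for money terms cannot be derived from these numbers alone; it depends on how heavily the affected queries are concentrated among the highest-value terms.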

Meanwhile, Google tends to roll out changes and then iterate on them. The lack of screaming protests or volatile ranking “weather” reports suggests that very few results visible to a ranking scraper actually changed when Hummingbird was released. Instead, Google rolled out the tools it needs to make the next leap in personalization, which will gradually pick winners and losers.

A Third Set Of Signals

SEO has long focused on onsite and offsite ranking signals, but the time has come to recognize a third set of signals. Google’s conversion testing within the SERPs and user interaction signals are becoming increasingly important to organic rankings. Let’s call this third set Audience Engagement Signals.

The good news is that this paradigm gives onsite changes a significant opportunity to improve performance and generate strong positive Audience Engagement Signals. Machine learning is data-driven, and Audience Engagement Signals, like clicks, shares, repeat visits and brand searches, are measurable user actions. Site owners who embrace user-focused optimization and align their testing goals with what we can reasonably infer to be Google’s conversion metrics (instead of our own narrowly defined conversion goals) are likely to improve audience engagement.

That is how to optimize your SEO strategy for now, for next year, and for the foreseeable future.

Opinions expressed in the article are those of the guest author and not necessarily Search Engine Land.

Article source: http://searchengineland.com/optimizing-seo-strategy-2014-beyond-183806