Search engines trawl the web, sifting through billions of data points to serve up information in a fraction of a second. The access to instant information we’ve come to take for granted is based on an enormous system of data retrieval and software.

Google has been the most forthcoming about how its search engine works, so I’ll use it as an example.

At the simplest level, search engines do two things.

- Index information. Discover and store information about 30 trillion individual pages on the World Wide Web.

- Return results. Through a sophisticated series of algorithms and machine learning, identify and display to the searcher the pages most relevant to her search query.

Crawling and Indexation

How did Google find 30 million web pages? Over the past 18 years, Google has been crawling the web, page by page. A software program called a crawler — also known as a robot, bot, or spider — starts with an initial set of web pages. To get the crawler started, a human enters a seed set of pages, giving the crawler content and links to index and follow. Google’s crawling software is called Googlebot, Bing’s is called Bingbot, and Yahoo uses Slurp.

When a bot encounters a page, it captures the information on that page, including the textual content, the HTML code that renders the page, information about how the page is linked to, and the pages to which it links.

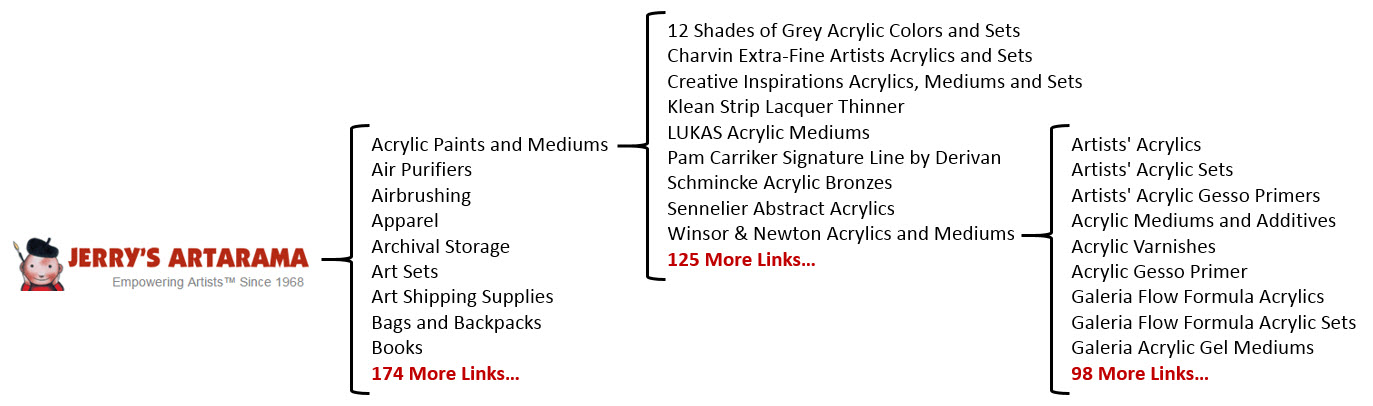

As Googlebot crawls, it discovers more and more links. The image below shows a very simplistic diagram of a single, three-page crawl path on Jerry’s Artarama, a discount art supplies ecommerce site.

An example of a simple crawl path on Jerrysartarama.com.

The logo at left indicates a starting point at the site’s home page, where Googlebot encounters 184 links: the 10 listed and 174 more. When Googlebot follows the “Acrylic Paints and Mediums†link in the header navigation, it discovers another page. The “Acrylic Paints and Mediums†page has 135 links on it. When Googlebot follows the link to another page, such as “Winsor Newton Acrylics and Mediums,†it encounters 108 links. The example ends there, but crawlers continue accessing pages via the links on each page they discover until all of the pages considered relevant have been discovered.

In the process of crawling a site, bots will encounter the same links repeatedly. For example, the links in the header and footer navigation should be on every page. Instead of recrawling the content in the same visit, Googlebot may just note the relationship between the two pages based on that link and move on to the next unique page.

All of the information gathered during the crawl — for 30 trillion web pages — is stored in enormous databases in enormous data centers. To get an idea of the scale of just one of its 15 data centers, watch Google’s official tour video “Inside a Google data center.â€

Â

As bots crawl to discover information, the information is stored in an index inside the data centers. The index organizes information and tells a search engine’s algorithms where to find the relevant information when returning search results.

But an index isn’t like a dark closet that everything gets stuffed into randomly as it’s crawled. Indexation is tidy, with discovered web page information stored along with other relevant information, such as whether the content is new or an updated version, the context of the content, the linking structure within that particular website and the rest of the web, synonyms for words within the text, when the page was published, and whether it contains pictures or video.

Returning Search Results

Results are displayed after you search for something in a search engine. Every web page displayed is called a search result, and the order in which the search results are displayed is known as ranking.

But once information is crawled and indexed, how does Google decide what to show in search results? The answer, of course, is a closely guarded secret.

How a search engine decides what to display is loosely referred to as its algorithm. Every search engine uses proprietary algorithms that it has designed to pull the most relevant information from its indices as quickly as possible in order to display it in a manner that its human searchers will find most useful.

For instance, Google Search Quality Senior Strategist Andrey Lipattsev recently confirmed that Google’s top three search ranking factors are content, links, and RankBrain, a machine learning artificial intelligence system. Regardless of what each search engine calls its algorithm, the basic functions of modern search engine algorithms are similar.

Content determines contextual relevance. The words on a page, combined with the context in which they are used and to pages they are linked to, determines how the content is stored in the index and which search queries it might answer.

Links determine authority and relevance. In addition to providing a pathway for crawling and discovering new content, links also act as authority signals. Authority is determined by measuring signals related to the relevance and quality of the pages linking into each individual page, as well as the relevance and quality of the pages to which that page links.

Search engine algorithms combine hundreds of signals with machine learning to determine the match between each page’s context and authority and the searcher’s query to serve up a page of search results. A page needs to be among the top seven to 10 most-highly-matched pages algorithmically, in both contextual relevance and authority, to be displayed on the first page of search results.

Article source: http://www.practicalecommerce.com/articles/98271-SEO-How-Search-Engines-Work