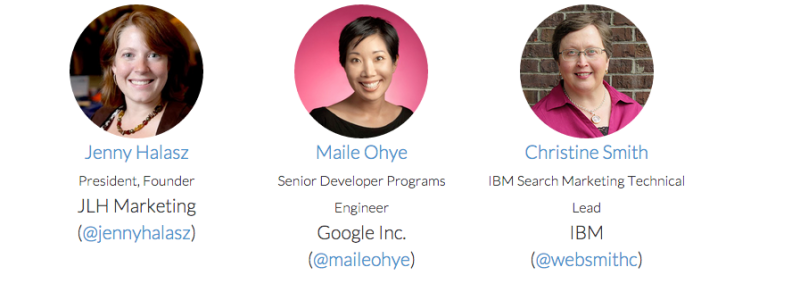

Today, I’m bringing you the latest scuttlebutt in Advanced Technical SEO, from a session at SMX Advanced moderated by Barry Schwartz with presentations from the following industry veterans:

- Jenny Halasz, President and Founder of JLH Marketing

- Christine Smith, Technical Lead at IBM Search Marketing

- Maile Ohye, Senior Developer Programs Engineer at Google

I believe the majority of technical SEO involves many of the same basic best practices that have been established for some years. The “advanced” portion of technical SEO is often found in the nooks and crannies around the edges: in the extremes of large enterprise e-commerce site development, or in exception cases and poorly defined situations.

But that’s not always the case, as you’ll see below in some of the highlights of the material I’m covering.

Maile Ohye, Senior Developer Programs Engineer, Google

Maile Ohye was up first, and she did her usual thorough and technically elegant job covering a few topics that she and her team at Google wanted to evangelize to the advanced audience.

HTTP/2

Her first topic was HTTP/2, and she started by walking through a bit of web history: the first version of the Hypertext Transfer Protocol (HTTP/1.0) was simplistic, designed for webpages that had relatively few external assets, and early browsers downloaded webpage assets sequentially.

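Fast-forward to the present, and webpages frequently have 50+ resources, which HTTP/1.x handles inefficiently: browsers open only a handful of connections per host, and in practice each connection carries one request at a time. Such situations sparked all sorts of performance workarounds, like image sprites, concatenated files, etc.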

Maile explained that HTTP/2 has a number of benefits over the earlier versions. It multiplexes many parallel requests over a single connection, supports prioritized resource fetching (such as for content appearing above the fold), and compresses HTTP headers. Most major modern browsers now support HTTP/2 (Google has announced that Chrome will move completely to HTTP/2 by 2016 and drop support for the earlier, non-standard SPDY protocol). For a site to take advantage of HTTP/2, its servers must support the new protocol.
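To make the connection-limit problem concrete, here is a deliberately simplified back-of-the-envelope model in Python. It assumes six connections per host for HTTP/1.x (a common browser default, used here only as an assumption), no pipelining, and a fixed round-trip time, and it ignores bandwidth, TCP/TLS handshakes and server processing time. It sketches the intuition behind multiplexing; it is not a benchmark.

```python
import math


def round_trips_http1(resources: int, connections_per_host: int = 6) -> int:
    """Request "waves" needed when each connection carries one request at a time."""
    return math.ceil(resources / connections_per_host)


def round_trips_http2(resources: int) -> int:
    """One multiplexed connection: all requests go out in a single wave.

    Assumes the server's concurrent-stream limit exceeds the resource count.
    """
    return 1 if resources > 0 else 0


def estimated_ms(round_trips: int, rtt_ms: float) -> float:
    """Latency-only estimate: ignores bandwidth, handshakes and server time."""
    return round_trips * rtt_ms


resources, rtt = 50, 100.0  # hypothetical page: 50 assets, 100 ms round trip
h1, h2 = round_trips_http1(resources), round_trips_http2(resources)
print(f"HTTP/1.x: {h1} waves, ~{estimated_ms(h1, rtt):.0f} ms")  # 9 waves, ~900 ms
print(f"HTTP/2:   {h2} wave,  ~{estimated_ms(h2, rtt):.0f} ms")  # 1 wave, ~100 ms
```

Real pages won't see a clean 9x difference, but the model shows why workarounds like sprites and concatenation existed: they reduce the number of waves under HTTP/1.x.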

Now, Maile didn’t state that HTTP/2 conveys any SEO benefit. But the implication is fairly clear: Google has pushed forward signals involving quality and user experience, including page speed, and the HTTP/2 protocol improves how quickly browsers receive a webpage’s contents and how quickly the page can render in the browser window.

Since this protocol improves performance, one can imagine Google declaring HTTP/2 support a ranking factor in the future. And even if Google never announces it as one, it could easily help with the “Page Speed” ranking factor to some degree, becoming a de facto ranking factor.

HTTPS

Maile went on to evangelize the HTTPS protocol, explaining why it matters, and noting how some of the largest, most popular sites/services on the internet (like Twitter, Facebook and Gmail) have moved to HTTPS, indicating how its importance is now widely recognized.

(As an aside, the slightly curmudgeonly, cynical crank in me has been mildly amused that Google first pushed Page Speed as a ranking factor out of a desire to speed up the internet, and is now pushing HTTPS, which has the potential to slow down the internet as encryption adds overhead to the data transferred and processed. And it has now launched mobile-friendliness as a ranking factor for mobile search, while also encouraging HTTP/2, which results in more parallel requests that can glut mobile networks.

But realistically, HTTPS increases bandwidth only negligibly in most cases, and newer-generation networks increasingly handle parallel requests well. Also, from what I’ve seen of how Google assesses Page Speed, it basically ignores a major piece of internet speed: how long the data takes to reach you from the server. The ranking factor seems based primarily on how much data is passed and how quickly content renders in the browser. If you design your pages well for desktop and mobile, you eliminate much of the basis for my crankiness!)

I won’t detail the steps Maile provided for converting a site from HTTP to HTTPS, since there are many sources for this. However, it’s worth mentioning that, according to Maile, only a third of the HTTPS URLs that Google discovers end up chosen as canonical, because sites send inconsistent HTTP/HTTPS signals; webmasters are pretty sloppy about this. She suggests referring to Google’s documentation on moving a site from HTTP to HTTPS.
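Since Maile’s point was about inconsistent signals, here is a minimal Python sketch of the kind of consistency check a site owner might run after a migration. It assumes you have already collected, for each page, the status code and `Location` header returned by the old HTTP URL, the rel=canonical on the HTTPS version, and any Sitemaps entries for that page. `PageSignals` and `https_conflicts` are hypothetical names for illustration, not part of any Google tool.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class PageSignals:
    """What a crawl of one page turned up (how you collect it is up to you)."""
    http_url: str                   # e.g. "http://www.example.com/shoes"
    redirect_status: Optional[int]  # status code returned for http_url
    redirect_target: Optional[str]  # its Location header, if any
    canonical: Optional[str]        # rel=canonical on the HTTPS page
    sitemap_urls: List[str] = field(default_factory=list)


def https_conflicts(sig: PageSignals) -> List[str]:
    """List every signal that fails to point at the HTTPS version of the page."""
    scheme, rest = sig.http_url.split("://", 1)
    if scheme != "http":
        raise ValueError("expected an http:// URL")
    expected = "https://" + rest
    problems = []
    if sig.redirect_status != 301:
        problems.append(f"HTTP URL returns {sig.redirect_status}, not a 301")
    if sig.redirect_target != expected:
        problems.append(f"redirect points to {sig.redirect_target!r}")
    if sig.canonical != expected:
        problems.append(f"rel=canonical is {sig.canonical!r}")
    problems += [f"sitemap lists {u!r}" for u in sig.sitemap_urls if u != expected]
    return problems


sig = PageSignals(
    http_url="http://www.example.com/shoes",
    redirect_status=301,
    redirect_target="https://www.example.com/shoes",
    canonical="http://www.example.com/shoes",  # stale canonical left over from HTTP days
    sitemap_urls=["https://www.example.com/shoes"],
)
print(https_conflicts(sig))  # ["rel=canonical is 'http://www.example.com/shoes'"]
```

An empty list means every signal agrees on the HTTPS URL; anything else is exactly the kind of mixed message that can keep Google from picking the HTTPS version as canonical.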

Rendering Of Webpages

Maile went on to comment on Google’s rendering of webpages. Google is increasingly sophisticated about interpreting page content to “see” how it lays out for desktop and mobile users, because it wants to be consistent with how end users see pages. She recommends being aware of this: hidden content, and URLs that are accessible through clicks, mouseovers or other actions, are discoverable by Google, and Google will crawl the content it finds.

Be wary of pages with large numbers of embedded assets: page resources are either crawled by Googlebot or served from a cache of a prior crawl, and they may be prioritized in the crawl queue on par with product or article pages. URLs found through rendering can be crawled and can pass PageRank. Hidden content is assessed as having lower priority on a page than content that’s immediately visible on page load or found above the fold.

Finally, if your mobile-rendering CSS is inaccessible to Google because of robots.txt or some such, Google will consider the page to be non-mobile-friendly.
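One quick way to spot-check whether robots.txt blocks your stylesheets is Python’s standard-library `urllib.robotparser`, sketched below with hypothetical paths (`/assets/`, `/assets/css/mobile.css`). Note that this parser applies rules in file order and does not understand Google’s `*` and `$` wildcards, whereas Googlebot uses the most specific matching rule, so treat it as a rough check and confirm with Google’s own tools.

```python
from urllib.robotparser import RobotFileParser


def fetchable(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """Would `agent` be allowed to fetch `url` under this robots.txt text?"""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


BLOCKING = """\
User-agent: *
Disallow: /assets/
"""

# Allow is listed first so Python's first-match order and Google's
# most-specific-match rule agree on the result.
FIXED = """\
User-agent: *
Allow: /assets/css/
Disallow: /assets/
"""

CSS = "https://www.example.com/assets/css/mobile.css"
print(fetchable(BLOCKING, CSS))  # False: mobile stylesheet blocked
print(fetchable(FIXED, CSS))     # True
```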

Jenny Halasz, President And Founder Of JLH Marketing

Jenny was up next, and she ran through a number of elements she has encountered while diagnosing SEO performance issues for websites. When trying to isolate and diagnose issues, she sorts some of the signals Google uses into “Definitive” and “Not Definitive” categories; such signals indicate to Google whether or not you know what you’re doing.

Definitive Signals

For Definitive signals, she lists 301 redirects, page deletions (4xx status responses), robots.txt and the noindex directive, though she notes that a 301 redirect is not always definitive. She noted that while a 404 “Not Found” response is almost definitive, Google may recrawl the URL a few times to be sure, whereas a 410 “Gone” response is apparently more definitive, since it conveys that a page or resource is permanently gone.
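To illustrate how these definitive status codes look from the server side, here is a minimal WSGI sketch using only Python’s standard library. The `PAGES`, `MOVED` and `REMOVED` tables are hypothetical stand-ins for whatever your CMS knows about its URLs: moved pages get a 301 with a `Location` header, deliberately deleted pages get a 410, and anything unknown falls through to a 404.

```python
from wsgiref.util import setup_testing_defaults

# Hypothetical URL inventory; in practice this would come from your CMS.
PAGES = {"/": b"Home page"}
MOVED = {"/old-product": "https://www.example.com/new-product"}
REMOVED = {"/discontinued-product"}


def app(environ, start_response):
    path = environ.get("PATH_INFO", "/")
    if path in PAGES:
        start_response("200 OK", [("Content-Type", "text/plain")])
        return [PAGES[path]]
    if path in MOVED:
        # Permanent move: send crawlers and users to the new URL.
        start_response("301 Moved Permanently", [("Location", MOVED[path])])
        return [b""]
    if path in REMOVED:
        # Deliberately deleted and not coming back: 410 is the stronger signal.
        start_response("410 Gone", [("Content-Type", "text/plain")])
        return [b"This page has been permanently removed."]
    # Unknown URL: 404, which a crawler may recheck a few times before dropping it.
    start_response("404 Not Found", [("Content-Type", "text/plain")])
    return [b"Not found."]


def status_for(path: str) -> str:
    """Call the app in-process (no network) and return its status line."""
    environ = {"PATH_INFO": path}
    setup_testing_defaults(environ)
    statuses = []
    app(environ, lambda status, headers, exc_info=None: statuses.append(status))
    return statuses[0]


for p in ["/", "/old-product", "/discontinued-product", "/missing"]:
    print(p, "->", status_for(p))
```

To try it in a browser, `app` could be served locally with `wsgiref.simple_server.make_server`.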

She noted that the noindex directive must be applied on a page-by-page basis, that a noindexed page’s links are still followed unless they’re nofollowed, and that noindex merely signals not to include the page in the index, while link authority still flows.
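Because noindex is applied page by page, it helps to check which directives a given page actually sends. The sketch below, again standard-library Python, collects directives from `<meta name="robots">` (or `googlebot`) tags and from an `X-Robots-Tag` response header. The class and function names are hypothetical, and it keeps user-agent-prefixed header values such as `googlebot: noindex` verbatim rather than interpreting them.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional, Set


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.extend(attr.get("content", "").split(","))


def robots_directives(html: str, headers: Optional[Dict[str, str]] = None) -> Set[str]:
    """Union of page-level robots directives from meta tags and X-Robots-Tag headers."""
    parser = RobotsMetaParser()
    parser.feed(html)
    raw = list(parser.directives)
    for name, value in (headers or {}).items():
        if name.lower() == "x-robots-tag":
            # Values like "googlebot: noindex" are kept verbatim, not interpreted.
            raw.extend(value.split(","))
    return {d.strip().lower() for d in raw if d.strip()}


page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(sorted(robots_directives(page)))  # ['follow', 'noindex']
```

In this example the page stays out of the index, but since there is no `nofollow`, its links are still followed, which matches Jenny’s description.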

Robots.txt can tell the search engine not to crawl a page, but link authority is still passed, so the page may still appear in the index. She pointed to Greg Boser’s experiment with disallowing crawling of his site (http://gregboser.com): the homepage still appears in search results for some queries, albeit without a description snippet.

Non-Definitive Signals

For me, Jenny’s list of Non-Definitive Signals was more interesting, because with ambiguity comes a lot more uncertainty about how Google may interpret and use the signals. Here’s her list:

- rel=canonical: the name/value parameter is supposed to convey that there’s one true URL for a page, but alternate URLs can still get indexed due to inconsistencies in a site’s internal links, noncanonical external links, and inconsistent redirects.

- rel=next/prev: these two attributes are supposed to help define a series of pages, but once again, inconsistencies in internal/external links can cause incorrect URL versions to be indexed, or cause pages to fail to be crawled/indexed. Incorrect redirects and breaks in the pagination series can also be to blame.

- hreflang (rel=alternate): this annotation indicates a page’s language targeting, and its value must take one of two forms: either the language alone, or the language plus the region. You cannot specify the region alone. The annotations must correspond between two or more alternate-language versions of a page: the English page must list both the English and French page URLs, for instance, and the French page must list the same English and French URLs.

- Inconsistent Signals: other signals that need to be consistent include your Sitemaps URLs, which should match what you use elsewhere, such as in the canonicals; your main navigation links, which should match what’s used elsewhere; and your other internal links. As for self-referential canonicals, she recommends against using them, but if they are used, they must be correct and consistent.
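The hreflang correspondence requirement is easy to check mechanically once you have crawled the annotations. This Python sketch assumes a hypothetical input shape, a mapping from each page URL to the `{hreflang value: alternate URL}` pairs it declares, and reports every alternate that fails to link back. It does not validate the language and region codes themselves.

```python
from typing import Dict, List, Tuple

# Hypothetical crawl output: page URL -> {hreflang value: alternate URL} it declares.
Annotations = Dict[str, Dict[str, str]]


def missing_return_links(pages: Annotations) -> List[Tuple[str, str]]:
    """Return (page, alternate) pairs where the alternate does not link back to page."""
    problems = []
    for page, alternates in pages.items():
        for alt_url in alternates.values():
            if alt_url == page:
                continue  # the self-reference needs no return link
            if page not in pages.get(alt_url, {}).values():
                problems.append((page, alt_url))
    return problems


EN, FR = "https://www.example.com/en/", "https://www.example.com/fr/"
pages = {
    EN: {"en": EN, "fr": FR},
    FR: {"fr": FR},  # forgot to list the English version
}
print(missing_return_links(pages))
# [('https://www.example.com/en/', 'https://www.example.com/fr/')]
```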

Jenny went on to cite a number of common pitfalls in URL consistency: redirects/rewrites of URLs that don’t sync with how the URLs are used elsewhere; inconsistency in using WWW or not; inconsistency in handling HTTP/HTTPS (or, allowing both page versions to exist in the index); inconsistent handling of trailing slashes in URLs; breadcrumb links not using proper canonical links; and, having parameters that have been disallowed in Webmaster Tools.
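Several of these pitfalls (WWW vs. non-WWW, HTTP vs. HTTPS, trailing slashes) can be caught by normalizing the URLs a site links to and grouping the ones that collapse to the same key. The Python sketch below is one simple way to do that. `variant_key` is a hypothetical helper, and it assumes that `www.` and a trailing slash never distinguish genuinely different pages on your site, which you should verify before acting on its output.

```python
from collections import defaultdict
from typing import Dict, Iterable, List
from urllib.parse import urlsplit


def variant_key(url: str) -> str:
    """Drop scheme, a leading "www." and trailing slashes so variants compare equal."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[len("www."):]
    path = parts.path.rstrip("/") or "/"
    query = "?" + parts.query if parts.query else ""
    return host + path + query


def inconsistent_variants(urls: Iterable[str]) -> Dict[str, List[str]]:
    """Group URLs by variant_key and keep only groups with more than one spelling."""
    groups = defaultdict(set)
    for url in urls:
        groups[variant_key(url)].add(url)
    return {key: sorted(spellings) for key, spellings in groups.items() if len(spellings) > 1}


internal_links = [
    "https://www.example.com/shoes/",
    "http://example.com/shoes",
    "https://www.example.com/shoes/",
    "https://www.example.com/hats/",
]
print(inconsistent_variants(internal_links))
# {'example.com/shoes': ['http://example.com/shoes', 'https://www.example.com/shoes/']}
```

Each group in the output is a page the site is referring to in more than one way; picking one spelling and using it everywhere (links, redirects, canonicals, Sitemaps) removes the ambiguity.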

Jenny cited one unusual case: a page that had been 301-redirected, yet its URL still appeared in Google’s index. Why? She theorized it was due to inconsistency in how the URLs were cited across the website.

Maile responded at this point that Google doesn’t always consider 301s to be authoritative: there can be edge cases where the original URL is deemed to have greater authority, such as when a homepage is redirected to a login page. She also, surprisingly, stated that one shouldn’t trust the “site:” search operator, because it isn’t always indicative of the actual state of the index. (!!!)

Christine Smith, IBM Search Marketing Technical Lead

Next up was Christine Smith, whose presentation, “Tales of an SEO Detective,” covered three different issues she’d helped to investigate at IBM (serving as case studies for the audience).

Case #1

In her first case, she related how Google traffic to one of IBM’s self-support sites had dropped abruptly and mysteriously, along with the indexing of many thousands of its pages. The drop was about 28%, and it hit just as they were going into a holiday season.

It quickly became apparent, however, that the drop wasn’t due to seasonal traffic patterns: it caused a spike in support phone calls, as people were no longer able to search by error codes or issue descriptions to find solutions to their technical problems.

She walked through the steps of how they diagnosed the issue, including auditing their Sitemaps files, correcting them, still not seeing sufficient indexing improvements, and eventually contacting Google through their Google Site Search relationship. Google found that most of the sample pages they were using to diagnose the issue had been crawled near the time of the drop in indexing and had been found to be duplicates of their site support registration page.

After a series of steps, including cleaning up some Panda-related issues here and there and submitting a reconsideration request, the pages eventually were reindexed.

They theorized that the likely culprit was a non-ideal server status code returned during a maintenance period when the pages were unavailable. She recommends not using a 302 redirect or a 500/504 status code during such events, but instead using a 503 status code, which means “Service Unavailable.”
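As a hedged illustration of Christine’s recommendation, here is a minimal WSGI middleware in Python that answers every request with a 503 while a maintenance flag is set. The `maintenance_mode` wrapper, the `enabled` callable (which in practice might check for a flag file) and the one-hour `Retry-After` value are all assumptions for the example. `Retry-After` is a standard HTTP header, but how much weight a given crawler gives it is up to that crawler.

```python
from typing import Callable, Dict, Iterable, Tuple
from wsgiref.util import setup_testing_defaults


def maintenance_mode(app: Callable, enabled: Callable[[], bool], retry_after_s: int = 3600) -> Callable:
    """Wrap a WSGI app so every request gets a 503 while enabled() returns True."""

    def wrapped(environ, start_response) -> Iterable[bytes]:
        if enabled():
            start_response("503 Service Unavailable", [
                ("Content-Type", "text/plain; charset=utf-8"),
                ("Retry-After", str(retry_after_s)),  # seconds until we expect to be back
            ])
            return [b"Down for maintenance; please try again later."]
        return app(environ, start_response)

    return wrapped


def hello_app(environ, start_response):
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"hello"]


def call(app: Callable) -> Tuple[str, Dict[str, str]]:
    """Invoke a WSGI app in-process and return (status line, headers)."""
    environ: Dict = {}
    setup_testing_defaults(environ)
    seen = []
    app(environ, lambda status, headers, exc_info=None: seen.append((status, dict(headers))))
    return seen[0]


print(call(maintenance_mode(hello_app, enabled=lambda: True))[0])   # 503 Service Unavailable
print(call(maintenance_mode(hello_app, enabled=lambda: False))[0])  # 200 OK
```

Wrapping the whole app, rather than individual pages, means no URL can accidentally return a 302 or a 500 while the site is down.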

As takeaways from this case, Christine said that some platforms (such as WordPress) respond with 503 status codes during upgrades, while others, like Apache, IHS (IBM HTTP Server) and IIS (Microsoft), require modifying their rewrite rules. Sites using Akamai’s content delivery network could also ask Akamai for help passing the 503 status codes through.

Case #2

In her second case study, Christine related an incident where IBM had installed a new page interface built of dynamic “cards” delivered by AJAX/JavaScript. They discovered that pages linked from the cards were not getting indexed by Google.

After some sleuthing, they determined that the directory storing the JavaScript that rendered the cards was disallowed by robots.txt, and they resolved this. However, they also observed that the URLs visible on page load in the “Featured” section of the page were indexed by Google, while hidden URLs were not.

She also provided a further caution: Baidu and Yandex do NOT process JavaScript, so this interface likely wouldn’t work for them without some alternative content.

Case #3

In her third case study, Christine described how IBM’s Smarter Risk Journal had been moved, after which they discovered a problem: the logic that generated canonical URLs was flawed. Instead of each article getting its own canonical URL, every article’s canonical was the URL of the landing page, effectively signaling that all the pages were duplicates. They corrected the logic, but had to do further work when they discovered that some URLs containing special character encoding caused errors in some browsers. They tested further and made corrections as necessary.
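The Smarter Risk Journal bug and the encoding problems suggest two simple checks, sketched below in Python with hypothetical names and a hypothetical base URL: build each article’s canonical from its own slug, percent-encoding special characters so the URL is plain ASCII, and flag any canonical target claimed by more than one page.

```python
from collections import Counter
from typing import Dict, List
from urllib.parse import quote

BASE = "https://www.example.com/journal/"  # hypothetical base URL


def canonical_for(slug: str) -> str:
    """Each article's canonical is its own URL, with special characters percent-encoded."""
    return BASE + quote(slug, safe="")


def shared_canonicals(canonicals: Dict[str, str]) -> List[str]:
    """Canonical targets claimed by more than one page, i.e. an 'all dupes' signal."""
    counts = Counter(canonicals.values())
    return [url for url, n in counts.items() if n > 1]


slugs = ["cyber-risk-outlook", "résumé-risks"]
flawed = {f"/journal/{s}": BASE for s in slugs}             # every article -> landing page
fixed = {f"/journal/{s}": canonical_for(s) for s in slugs}  # every article -> itself
print(shared_canonicals(flawed))      # ['https://www.example.com/journal/']
print(shared_canonicals(fixed))       # []
print(canonical_for("résumé-risks"))  # https://www.example.com/journal/r%C3%A9sum%C3%A9-risks
```

A non-empty result from `shared_canonicals` on a set of distinct articles is a red flag worth investigating before Google does.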

Christine finished with a summary of recommendations for things to do to diagnose and avoid errors:

- Check canonical URLs

- Check robots.txt

- Check redirects

- Verify sitemaps

- Use 503 Service Unavailable HTTP responses during site maintenance
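For the “Verify sitemaps” item, one simple check is to confirm that every `<loc>` in a sitemap is the canonical URL of its page. The Python sketch below parses a sitemap with the standard library’s `xml.etree.ElementTree`; the `canonical_of` mapping stands in for canonicals you have collected by crawling your own pages, and all URLs are hypothetical. Sitemap entries missing from that mapping are flagged too, since their canonical is unknown.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_locs(xml_text: str) -> List[str]:
    """All <loc> values from a standard <urlset> sitemap."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text]


def non_canonical_entries(xml_text: str, canonical_of: Dict[str, str]) -> List[str]:
    """Sitemap URLs that are not the declared canonical of their own page."""
    return [url for url in sitemap_locs(xml_text) if canonical_of.get(url) != url]


SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/support/err-1001</loc></url>
  <url><loc>https://www.example.com/support/err-1002?src=nav</loc></url>
</urlset>"""

canonical_of = {  # hypothetical crawl results: URL -> rel=canonical found on that page
    "https://www.example.com/support/err-1001": "https://www.example.com/support/err-1001",
    "https://www.example.com/support/err-1002?src=nav": "https://www.example.com/support/err-1002",
}
print(non_canonical_entries(SITEMAP, canonical_of))
# ['https://www.example.com/support/err-1002?src=nav']
```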

Overall, the Advanced Technical SEO session was interesting and informative. Anyone who has been involved with development and search engine integration for enterprise websites with many thousands of pages knows that complex, poorly documented situations can arise, and it’s really helpful to hear how other professionals go about diagnosing and addressing issues like these that can impair performance. The session gave me a few more tools and solutions for the future.

Some opinions expressed in this article may be those of a guest author and not necessarily Search Engine Land.



(Some images used under license from Shutterstock.com.)

Article source: http://searchengineland.com/latest-advanced-technical-seo-smx-advanced-222971