This is part 2 of 2 in a series of articles in which I lay out a vision to bring more objectivity to SEO by applying a search engine engineer’s perspective to the field. If you haven’t yet, please read the first part of this series, where I present a step-by-step way to statistically model any search engine.

In this installment, I will show you how to use your new search engine model to uncover opportunities that were not possible before, including accurately predicting when you will pass your competition (or when they will pass you!), precisely forecasting your traffic/revenue months before it happens, and even efficiently budgeting between SEO and Pay-Per-Click (PPC) by solving the “Traveling Salesman Problem”.

Full Disclosure: I am the Co-Founder and CTO of MarketBrew, a company that develops and hosts a SaaS-based commercial search engine model.

So What? What Can I Do with This Thing?

For an outsider to the SEO industry, or even a conventional CMO who hasn’t been exposed to newer technical SEO approaches, this is certainly not a stupid question. In part 1 of this series, you learned how to build a search engine model and self-calibrate it to any search engine environment.

So now what? How does one benefit from having this technical capability?

Well, it turns out that since you have a model, you now have the ability to “machine learn” each local search engine environment’s quirks (and fluctuations in algorithmic weightings). This can really help you predict what will happen in that environment, even when you make the tiniest of changes. We call this statistical inference.

This statistical inference gives you a tremendous financial and operational advantage when it comes to SEO (and even to budgeting and targeting Pay-Per-Click, as you will learn shortly).

Here are just some of the questions this approach can answer:

- How much optimization do I have to do, to pass my competitor for a specific keyword?

- Will my competitor pass me in ranking for a specific keyword, and if so, when?

- Which specific optimizations will cause the biggest shift in revenue? I’ve got 1,000 top keywords and a hefty number (1M+) of web pages, so how do I solve for this? And which optimizations should I avoid because they are pitfalls?

- Which keywords should I be targeting with Pay-Per-Click (PPC) and which should be targeted using SEO? Are there keywords where I should absolutely be targeting both?

Developing a Real-Time 60-Day Forecast of Your Traffic (And Revenue)

First things first: the most important benefit of this approach is that it can accurately predict search results months in advance of traditional ranking systems, because traditional ranking systems are based on months-old scoring data from their respective search engine.

Remember, our model, once calibrated, is decoupled from the live search engine. You can run changes through it and shortcut all of the lengthy processing and query layer noise that you are subjected to when using standard ranking platforms.

For example, on a standard ranking platform, rankings are reported as they show up on the search engine, naturally. Some ranking platforms will tell you that they update every week (or even every day!), but all that measures is how synchronized they are with Google or whichever search engine they track. That search engine data and its many generations of scoring are inherently months old, having been through the process of crawling, indexing, scoring, and shuffling with thousands of other parallel calculations for other web pages.

With your own search engine model, you can easily simulate changes, instantly. Need to test out a change and see what will happen to your traffic and/or revenue once the search engine has had the time to process and integrate this new data into its search results? No problem, fire up the model, test, repeat, test, confirm, and push to production.

The Perfect Set of Moves Is Right There. You Just Can’t See It, Yet.

Those heading up their Fortune 1000 SEO division are responsible for trillions of potential combinations of keyword / web page optimizations that, if made in the right order, will determine their future career success (or failure). No wonder SEO carries such personal emotions for so many of its practitioners.

“In chess, as it is played by masters, chance is practically eliminated.” - Emanuel Lasker

However, with a highly correlated statistical model of their target search engine environment, the series of “right moves” is more about their execution than mystery. Much more controllable = much more sleep for SEOs and their managers.

To accurately and precisely determine WHERE to start optimizing, we must solve a very big computer science problem called The Traveling Salesman Problem. The Traveling Salesman Problem asks: Given a list of cities and the distances between each pair of cities, what is the shortest possible route that visits each city exactly once and returns to the origin city?
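To make the classical problem concrete, here is a minimal brute-force sketch. The distance matrix and the function name `shortest_tour` are made up for illustration, and trying every ordering is only practical for a handful of cities, which is exactly why the problem is considered hard at scale.

```python
from itertools import permutations


def shortest_tour(dist):
    """Return (length, route) of the shortest round trip from city 0.

    dist is a square matrix: dist[i][j] is the distance from city i to city j.
    """
    n = len(dist)
    best_length, best_route = float("inf"), None
    # Fix city 0 as the origin and try every ordering of the other cities.
    for order in permutations(range(1, n)):
        route = (0, *order, 0)
        length = sum(dist[a][b] for a, b in zip(route, route[1:]))
        if length < best_length:
            best_length, best_route = length, route
    return best_length, best_route


# Hypothetical symmetric distances between four cities.
DISTANCES = [
    [0, 10, 15, 20],
    [10, 0, 35, 25],
    [15, 35, 0, 30],
    [20, 25, 30, 0],
]

print(shortest_tour(DISTANCES))  # (80, (0, 1, 3, 2, 0))
```

With four cities there are only six orderings to check. The count grows factorially with the number of cities, so real instances need heuristics or approximation rather than exhaustive search.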

Remember, you cannot simply point out one keyword/web page combination that might benefit from a particular isolated optimization. Why? Because it could actually have an overall negative effect on your traffic/revenue! Instead, each pairing and combination of optimizations must be solved with respect to each other. This is very similar to The Traveling Salesman Problem, with a couple of twists.

In our case, the “cities” are optimizations or “changes to the website”, and the “distances” between those “cities” are represented by the amount of effort it takes to implement those optimizations.

We now have a well-defined computer science problem, and a very direct analogy from which to create our inputs. How do we determine the “effort” it takes to carry out each proposed optimization? With your new search engine model, it’s actually quite simple and straightforward.

First, we need to measure each optimization’s benefit and cost. Those ROI calculations, or “roads between cities”, give us the “cost” of implementing each optimization.

Creating the ROI Model

Before we determine which optimizations are the “right” ones, we must first define what “right” means. In this instance, we are going to define success by traffic, revenue, or both.

To find the highest ROI moves, we will need to measure the traffic/revenue increase versus the amount of optimization needed (the cost of optimization). This turns out to be a perfect problem for our new search engine model.

To measure this, the search engine model must:

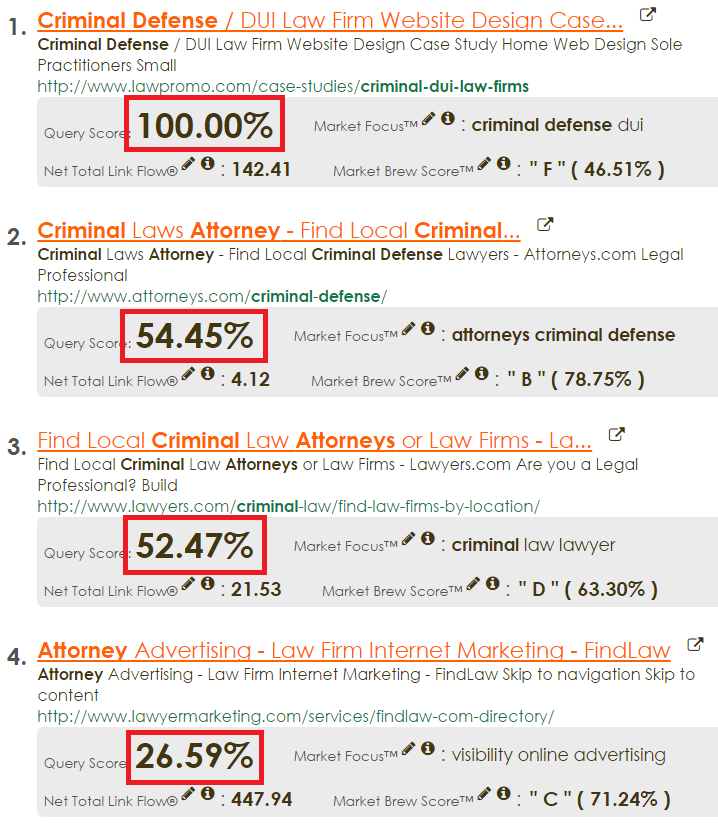

- Determine ranking distances of each search term, including the overall query score and all individual scores that go into the overall query score.

- Simulate all possible ranking changes for every web page / keyword combination.

- Order simulations by most efficient (i.e. least amount of changes for most gain in reach).

- Recommend best path of optimizations / tasks, based on user preferences, taking into account the classical Traveling Salesman Problem.
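The last two steps in the list above can be sketched roughly as follows. This is a simple greedy heuristic, not a Traveling Salesman solver and not necessarily how any commercial model does it: it ranks simulations by gain per unit of cost and takes the ones that fit an effort budget. The `Simulation` fields, the `budget` parameter, and the sample numbers are all assumptions made for illustration, and the sketch ignores interactions between optimizations, which the article stresses do matter.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Simulation:
    page: str
    keyword: str
    reach_gain: float  # estimated extra visits if the ranking change lands
    score_cost: float  # query-score increase required (assumed to be > 0)

    @property
    def efficiency(self):
        # Gain in reach per unit of query score that must be earned.
        return self.reach_gain / self.score_cost


def plan_optimizations(simulations, budget):
    """Greedy plan: take the most efficient simulations that fit the budget."""
    plan, spent = [], 0.0
    for sim in sorted(simulations, key=lambda s: s.efficiency, reverse=True):
        if spent + sim.score_cost <= budget:
            plan.append(sim)
            spent += sim.score_cost
    return plan


# Hypothetical simulation results for three page / keyword pairs.
SIMULATIONS = [
    Simulation("/a", "red shoes", reach_gain=100, score_cost=10),
    Simulation("/b", "blue shoes", reach_gain=90, score_cost=3),
    Simulation("/c", "green shoes", reach_gain=40, score_cost=8),
]

for sim in plan_optimizations(SIMULATIONS, budget=12):
    print(sim.page, sim.keyword, sim.efficiency)
```

With a budget of 12, the plan picks `/b` first (most efficient), skips `/a` because it no longer fits, and then takes `/c`. A fuller treatment would re-run the simulations after each chosen change, since one optimization can alter the cost or benefit of the next.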

Step 1: Determine Ranking Distances

Here’s where our transparent search engine model leaps into action. Not only can you see the order of the search results for a particular query, but you can also see the distances between those results, and how those distances were calculated.

Knowing the distances between search results, in terms of query score, is an important step towards automating SEO. We can now define the cost / work that each optimization requires, and begin to simulate all possible moves with great precision.
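As a rough illustration of what a "ranking distance" looks like in practice, the sketch below takes the modeled query scores for one query and reports how far a given page sits behind each result above it. The URLs, the scores, and the function name `ranking_distances` are hypothetical; a real model would also expose the individual scores that make up each overall query score.

```python
def ranking_distances(results, page):
    """Return the query-score gap between `page` and every result above it.

    results: list of (url, query_score) tuples for a single query.
    Returns (rank, url, gap) tuples ordered from rank 1 downward.
    """
    ranked = sorted(results, key=lambda r: r[1], reverse=True)
    scores = dict(ranked)
    if page not in scores:
        raise ValueError(f"{page} is not in the results for this query")
    own = scores[page]
    return [
        (rank, url, score - own)
        for rank, (url, score) in enumerate(ranked, start=1)
        if score > own
    ]


# Hypothetical modeled query scores for one search term.
RESULTS = [
    ("a.example", 92.0),
    ("b.example", 88.5),
    ("c.example", 81.0),
    ("mine.example", 79.5),
]

for rank, url, gap in ranking_distances(RESULTS, "mine.example"):
    print(f"rank {rank}: {url} is ahead by {gap} points")
```

Each gap is the amount of query score the page would have to gain to overtake that result, which is the "cost" side of the later ROI calculation.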

Step 2: Simulate All Possible Ranking Changes

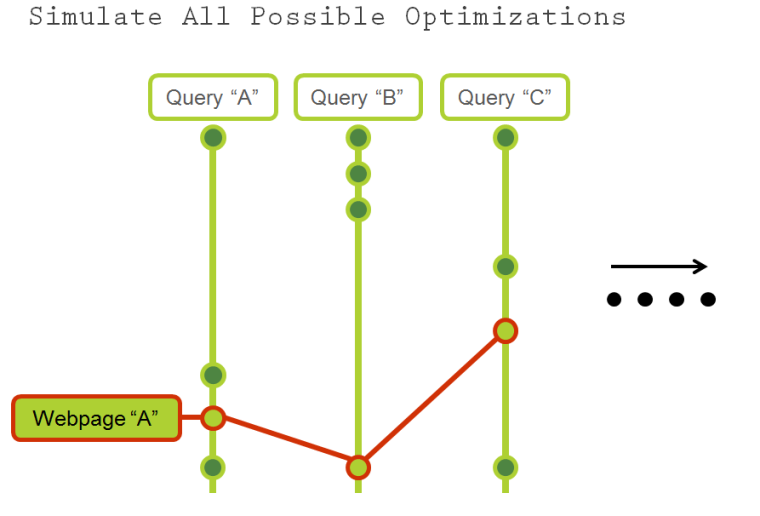

Once your search engine model has established a highly correlated environment, it can begin to simulate, for each web page and query, all the possible optimizations and subsequent ranking changes that can be made.

For each web page, for each query, simulate ranking changes

Within each simulation, we first determine how much efficiency there is within each ranking change. To determine efficiency, we must create a metric, for each simulated ranking change, that allows us to see how much a particular web page stands to gain in terms of reach versus how much it must increase its query score (i.e. cost) to gain that additional reach.
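One way to picture such a metric is sketched below, under loudly stated assumptions: reach is estimated from a made-up table of clicks per 1,000 searches by ranking position, and efficiency is simply reach gained divided by query score required. Neither the click table nor the formula comes from the article; they only illustrate the gain-versus-cost idea.

```python
# Hypothetical clicks per 1,000 searches at each ranking position (assumption).
CLICKS_PER_1000 = {1: 300, 2: 150, 3: 100, 4: 70, 5: 50}


def estimated_reach(rank, monthly_searches):
    """Estimated monthly visits for a result at `rank`."""
    return CLICKS_PER_1000.get(rank, 0) * monthly_searches / 1000


def simulate_moves(scores, page, monthly_searches):
    """Simulate every upward ranking change for `page` on one query.

    scores: dict mapping url -> modeled query score.
    Returns one dict per target rank with reach gain, score cost, efficiency.
    """
    ranked = sorted(scores, key=scores.get, reverse=True)
    current_rank = ranked.index(page) + 1
    current_reach = estimated_reach(current_rank, monthly_searches)
    moves = []
    for target_rank in range(1, current_rank):
        rival = ranked[target_rank - 1]
        cost = scores[rival] - scores[page]  # query score that must be gained
        gain = estimated_reach(target_rank, monthly_searches) - current_reach
        moves.append({
            "target_rank": target_rank,
            "reach_gain": gain,
            "score_cost": cost,
            # A tie in score costs nothing to break, so treat it as unbounded.
            "efficiency": gain / cost if cost > 0 else float("inf"),
        })
    return moves


# Hypothetical modeled scores for one query with 1,000 searches per month.
SCORES = {"a.example": 90.0, "b.example": 85.0, "c.example": 80.0, "mine.example": 78.0}

for move in simulate_moves(SCORES, "mine.example", monthly_searches=1000):
    print(move)
```

In this made-up example, moving from rank 4 to rank 3 costs only 2 points of query score, while reaching rank 1 costs 12 points. Comparing gain per point is what lets the simulations be ordered by efficiency.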

Reach Potential (RP)

Article source: http://www.searchenginejournal.com/technical-future-seo-part-2/132481/