What in the world could Google’s SafeSearch possibly teach us about becoming better digital marketers?

Turns out, a whole lot. It perfectly illustrates how algorithms are used to automate search, and the same principles Google uses can help PPC pros improve their game.

Even as Google automates nearly every aspect of search marketing, you don’t need to sit idle on the sidelines and hope for the best. You can deploy ‘automation layering’ to stay in control.

I believe the concept of automation layering is fundamental to PPC success in an ever-more automated world.

SafeSearch: Algorithms + Humans

Let me start with an anecdote from when I worked on SafeSearch in the early 2000s, shortly after joining Google.

If you’ve heard me speak at PPC conferences, you already know why Google hired me: they needed a native Dutch speaker to work on AdWords.

Since I grew up in Belgium speaking Flemish (the Belgian version of Dutch) and I lived in Los Altos, just a few miles from where Google was starting its campus after outgrowing its space in Palo Alto, I was interviewed for the position.

Because the other native Dutch speaker they interviewed insisted on getting a private office and an admin, they quickly decided I might be the better fit.

I started work at Google, first translating AdWords into Dutch, then reviewing all Dutch ads and doing email support for customers in Belgium and the Netherlands.

One day, while I’m reviewing Dutch ads in the ‘bin’, a search engineer walks over to my cubicle with a stack of printouts and drops it on my desk. One look at the list and my jaw drops at some of the words on it.

The engineer tells me it’s a ranked list of pornographic searches. Their algorithm had already ranked those searches from most to least pornographic and now they just needed to figure out where to set the threshold for SafeSearch.

They wanted me to read through the list and draw a line where the searches went from a query definitely looking for porn, to one that could be construed as porn, but also something else.

I used my red marker to draw the line and that’s how the Dutch SafeSearch threshold got set: machine algorithms and Fred with his red pen.

What I learned from this experience was that algorithms are good at calculating similarities between things.

By assigning a numerical value to each query, they can rank them on different dimensions, like how pornographic they are.

But a human was needed to look at the ranked list to determine a reasonable threshold that fit the business criteria of what the SafeSearch feature was intended to do.
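That division of labor can be sketched in a few lines of Python. Everything here is illustrative: the queries and scores are made-up placeholders, and `rank` and `apply_threshold` are hypothetical helpers, not anything Google has published. The machine supplies the scores and the ordering, and a person picks the cutoff.

```python
from typing import List, Tuple

# Hypothetical (query, score) pairs. In practice a model would produce the scores.
SCORED_QUERIES: List[Tuple[str, float]] = [
    ("query a", 0.97),
    ("query b", 0.41),
    ("query c", 0.88),
    ("query d", 0.63),
]


def rank(scored: List[Tuple[str, float]]) -> List[Tuple[str, float]]:
    """Sort queries from highest to lowest score (the machine's job)."""
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


def apply_threshold(
    ranked: List[Tuple[str, float]], threshold: float
) -> Tuple[List[str], List[str]]:
    """Split a ranked list at a human-chosen cutoff (the red pen)."""
    above = [query for query, score in ranked if score >= threshold]
    below = [query for query, score in ranked if score < threshold]
    return above, below


ranked = rank(SCORED_QUERIES)
# The 0.80 cutoff is an arbitrary example of where a reviewer might draw the line.
filtered, allowed = apply_threshold(ranked, threshold=0.80)
```

Moving the threshold up or down changes how aggressive the filter is without touching the scoring itself, which is why the cutoff can be a business decision rather than an engineering one.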

Thresholds in PPC

It then dawned on me that Google uses thresholds in hundreds of places.

- There is a threshold for when an ad’s quality score is high enough to make it eligible to appear above organic results.

- There is a threshold for when ad experiments can be concluded.

- There are thresholds for when a query is similar enough to a keyword to show an ad.

- And so forth.

If Google uses so many thresholds, we advertisers should also think about how to recreate these systems so we can set our own thresholds.

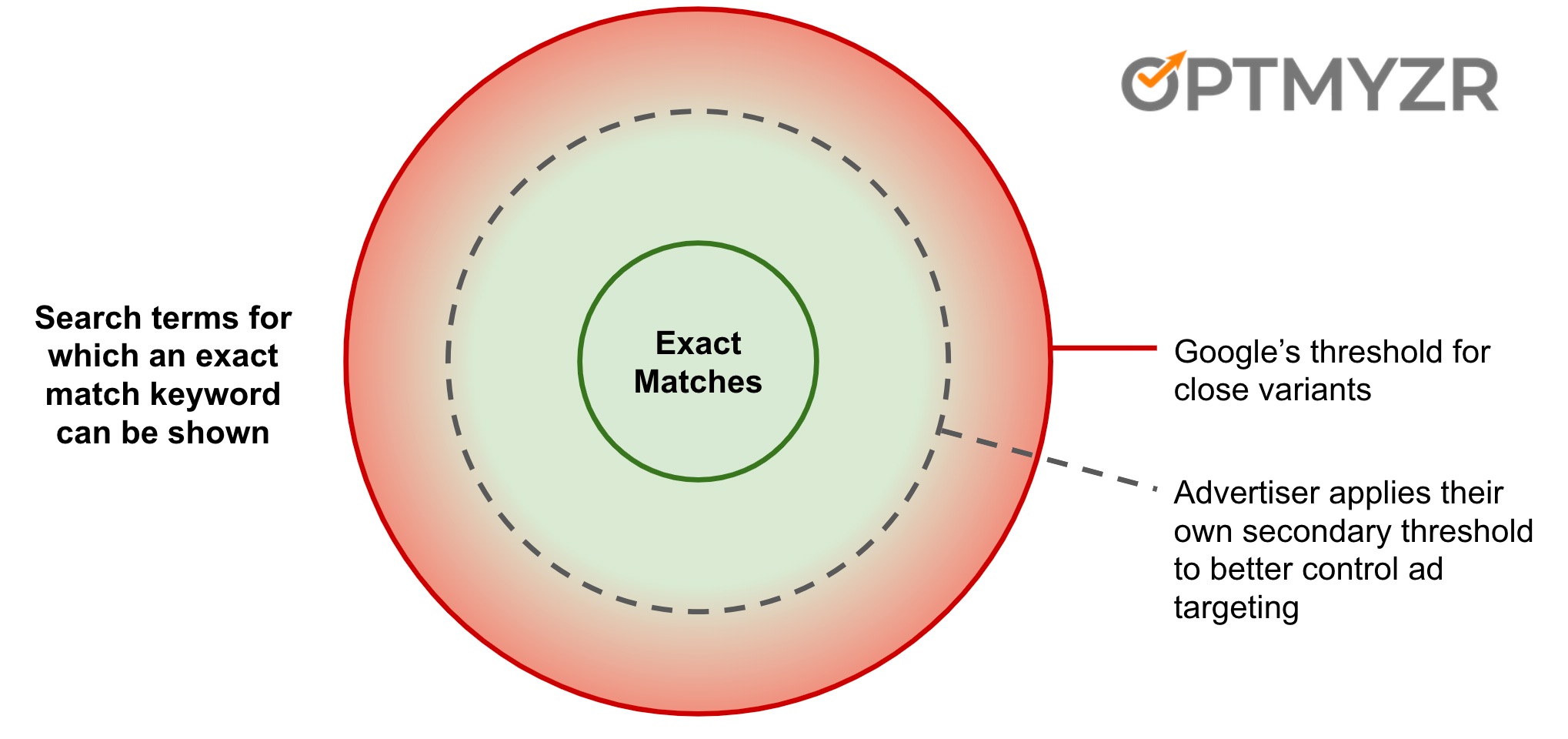

Google uses a threshold to determine when a query is close enough in meaning to be considered a ‘close variant.’ Advertisers can set their own lower threshold using their own automation to get more control over when their ad is shown.

The same algorithmic ranking happens every time an ad auction is run on Google. It happens in many forms, but one of the easier ones to understand is keyword matching.

Think, for example, about Google’s recent expansion of “close variants,” where exact match keywords are no longer exact but can also match searches that have the same meaning or intent.

When a user searches for something that is not exactly the same as the advertiser’s keyword, Google’s algorithms calculate a score for how likely it is that the search has the “same meaning,” or how close the search is to the keyword.

The machine returns a score and if that score is above some threshold, the ad is eligible to be shown.

In general, these automations mean advertisers get more results with less work. They no longer need to figure out every possible variation of every keyword, but instead let Google’s state-of-the-art machine learning systems figure it out.

I’m a fan of this type of automation, perhaps because when we looked at our own data for close variants, the vast majority of them were typos of our company name, Optmyzr.

I think we may be giving Britney Spears a run for her money in terms of how many ways our respective names can be misspelled (~500 for Britney in case you’re curious).

But there is always a level of hesitance about using these sorts of black-box systems because it’s impossible to predict what they will do in every possible scenario. That worries advertisers who are used to tremendous control over targeting their ads on search.

Automation Layering to Set Your Own Thresholds

This is where automation layering comes in.

There’s a false assumption that Google is the only one in control when an advertiser enables one of its automations. In this case, the automation is close variant matching for keywords, something that has no off-switch.

The reality is different though: advertisers have some level of control.

For example, they can add negative keywords when they find Google is showing their ads for a close variant that they don’t like.

The problem is that manual monitoring of automations is incredibly tedious and time-consuming. It probably isn’t worth the effort for the possible improvement in results.

But what if advertisers could automate their own process and combine it with Google’s automation?

That is automation layering.

In the close variants example, here’s one way to use automation layering. You use a rule engine (Optmyzr offers one) or a script that identifies close variant search terms and applies an algorithm to score and rank them.

In another post, I explained that advertisers can use a Levenshtein distance score to calculate how different a search term is from the matched keyword.

Search terms with a Levenshtein distance of 2 or 3 from the keyword are usually typos. Anything greater than that is often more than just a typo and may be worth a closer look:

- Either as an idea for a new keyword.

- Or possibly something to exclude by adding a negative keyword.

Now that the advertiser has their own numerical score, they can draw their own line and set a threshold they think makes sense for their business.
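As a rough illustration, here is a minimal Python sketch of that scoring step. It assumes you have already exported keyword and search term pairs from a search terms report. The `classify` helper, its labels, and the default threshold of 3 are hypothetical choices for this example, not part of any Google or Optmyzr API.

```python
def levenshtein(a: str, b: str) -> int:
    """Return the minimum number of single-character insertions,
    deletions, or substitutions needed to turn `a` into `b`."""
    if len(a) < len(b):
        a, b = b, a  # keep the shorter string in the inner loop
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,         # deletion
                current[j - 1] + 1,      # insertion
                previous[j - 1] + cost,  # substitution (or match)
            ))
        previous = current
    return previous[-1]


def classify(keyword: str, search_term: str, threshold: int = 3) -> str:
    """Label a search term using an advertiser-chosen distance threshold.

    The threshold is the advertiser's own line: at or below it, the term
    is treated as a likely typo; above it, the term is flagged for review
    as a possible new keyword or negative keyword.
    """
    distance = levenshtein(keyword.lower(), search_term.lower())
    return "likely typo" if distance <= threshold else "review"
```

For instance, `classify("optmyzr", "optmizr")` returns `"likely typo"` (distance 1), while `classify("optmyzr", "optmyzr pricing plans")` returns `"review"`. Raising or lowering `threshold` is how an advertiser would tune how strict the layer is.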

Google uses its threshold to show ads more often, and the advertiser layers on their threshold to show ads less often.

When the two automated thresholds are combined, advertisers benefit from showing their ads in scenarios where they didn’t have the keyword. They also benefit from tighter control over the relevance between the query and keyword.

Bottom Line

The point of my SafeSearch story and my close variants example is not just to explain that advertisers can control close variants, but to illustrate some ideas that are paramount to a successful future in PPC.

Most advertisers agree that humans + machines are better than machines alone, but humans don’t necessarily have the bandwidth to manually monitor all the new automations the engines keep introducing.

Thus, it makes sense that humans might want to find ways to create their own automations to layer on top of those of the engines.

Ultimately, we just want a say in how aggressive automations should be and that’s where thresholds come in.

Only by layering our own automations can we have control over the thresholds that Google doesn’t expose.

And because most advertisers aren’t technical and can’t write their own automations in code, I am a firm believer that prebuilt scripts, rule engines, custom monitoring and alerting systems, and the like will be critical tools in the arsenal of successful PPC managers in 2020 and beyond.

Article source: https://www.searchenginejournal.com/google-safesearch-ppc/329577/